sdd-sbcEmbed-v2 (Speaker Detection)

Version Changelog

| Plugin Version | Change |

|---|---|

| v2.0.0 | Initial plugin release, functionally identical to v1.0.0, but updated to be compatible with OLIVE 5.0.0 |

| v2.0.1 | Updated to be compatible with OLIVE 5.1.0 |

| v2.0.3 | Bug fixes, released with OLIVE 5.2.0 |

Description

Speaker Detection plugins will detect and label regions of speech in a submitted audio segment where one or more enrolled speakers are detected. Unlike Speaker Identification (SID), SDD is capable of handling audio with multiple talkers, as in a telephone conversation, and will provide timestamp region labels to point to the locations of speakers when speech from one of the enrolled speakers is found.

The goal of speaker detection is to identify and label regions within an audio file where enrolled target speakers are talking. This capability is designed to be used in files with multiple talkers speaking within the same file. For files where it is certain that only one talker will be present, either because it is collected this way or because a human has segmented the file, speaker recognition (SID) plugins should be used. This release of speaker detection is based on "segmentation-by-classification", in which the enrolled speakers are detected using a sliding and overlapping window over the file. This plugin does not do do "diarization"; it is only searching for regions where the particular enrolled speakers are talking and ignores all non-target speakers. The plugin is based on a core speaker recognition framework using speaker embeddings with PLDA backend and duration-aware calibration. This plugin improves on prior, deprecated technology based on diarization-and-classification using variational bayes diarization followed by scoring of discrete speaker regions. This approach had several major issues, including high use of computational resources, very long and unpredictable run-times (often 10 times slower than real time) and unpredictable performance. This release is average 30 times faster than the previous release, with much greater robustness for a variety of conditions and highly predictable performance.

Domains

- micFarfield-v1

- Domain optimized for microphones at various non-close distances from the speaker, designed to deal with natural room reverberation and other artifacts resulting from far-field audio recording.

- telClosetalk-v1

- Domain focused on close-talking microphones meant to address the audio conditions experienced with telephone conversations.

Inputs

For enrollment, an audio file or buffer with a corresponding speaker identifier/label. For scoring, an audio buffer or file.

Outputs

In the basic case, an SDD plugin returns a list of regions with a score for each detected, enrolled speaker. Regions are represented in seconds. As with SID, scores are log-likelihood ratios where a score of greater than “0” is considered a detection. The SDD plugins are generally calibrated or use dynamic calibration to ensure valid log-likelihood ratios to facilitate detection.

Example output:

/data/sid/audio/file1.wav 8.320 13.110 speaker1 -0.5348

/data/sid/audio/file1.wav 13.280 29.960 speaker2 3.2122

/data/sid/audio/file1.wav 30.350 32.030 speaker3 -5.5340

/data/sid/audio/file2.wav 32.310 46.980 speaker1 0.5333

/data/sid/audio/file2.wav 47.790 51.120 speaker2 -4.9444

/data/sid/audio/file2.wav 54.340 55.400 speaker3 -2.6564

Enrollments

Speaker Detection plugins allow class modifications. A class modification is essentially the capability to enroll a class with sample(s) of a class's speech - in this case, a new speaker. A new enrollment is created with the first class modification, which consists of essentially sending the system an audio sample from a speaker, generally 5 seconds or more, along with a label for that speaker. This enrollment can be augmented with subsequent class modification requests by adding more audio with the same speaker label.

Functionality (Traits)

The functions of this plugin are defined by its Traits and implemented API messages. A list of these Traits is below, along with the corresponding API messages for each. Click the message name below to go to additional implementation details below.

- REGION_SCORER – Score all submitted audio, returning labeled regions within the submitted audio, where each region includes a detected speaker of interest and corresponding score for this speaker.

- CLASS_MODIFIER – Enroll new speaker models or augment existing speaker models with additional data.

Compatibility

OLIVE 5.1+

Limitations

Known or potential limitations of the plugin are outlined below.

Labeling Resolution vs. Processing Speed vs. Detection Accuracy

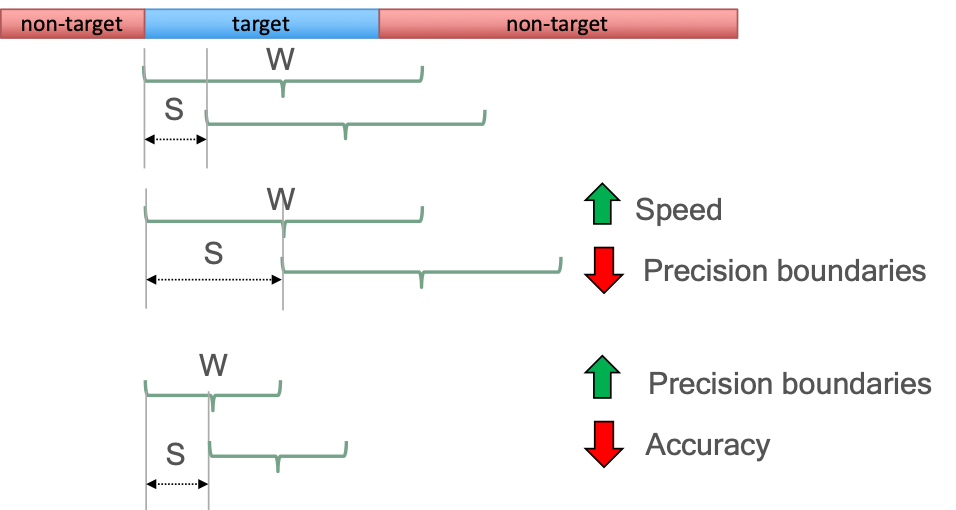

Region scoring is performed by first identifying speech regions and then processing the resulting speech regions above a certain length (win_sec) with a sliding window. Altering the default parameters for this windowing algorithm will have some impacts and tradeoffs with the plugin's overall performance.

Shortening the window and/or step size will allow the plugin to have a finer resolution when labeling speaker regions, by allowing it to make decisions on a smaller scale.

The tradeoff made by a shorter window size, though, is that the system will have less maximum speech to make its decisions, resulting in a potentially lower speaker labeling accuracy, particularly affecting the rate of missed speech.

A shorter step size will result in more window overlap, and therefore more audio segments that are processed multiple times, causing the processing time of the plugin to increase.

These tradeoffs must be managed with care if changing the parameters from their defaults.

Minimum Speech Duration

The system will only attempt to perform speaker detection on segments of speech that are longer than X seconds (configurable as min_speech, 2 seconds by default).

Comments

Segmentation By Classification

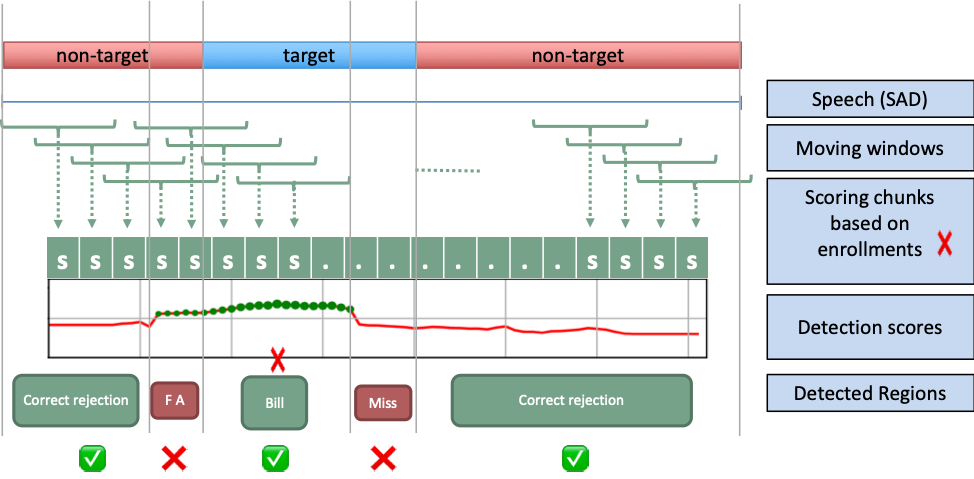

Live, multi-talker conversational speech is a very challenging domain due to its high variability and quantity of speech from many speakers across varying conditions. Rather than exhaustively segment a file to identify pure regions with a single talker (the vast majority of whom are not of actual interest), SBC scans through the file quickly using target speaker embeddings to find regions that are likely to be from a speaker of interest, based on the scores for their enrolled model. The approach consists on a sliding window with x-set steps as described in Figure 1.

Figure 1: Sliding window approach for Segmentation-by-Classification (SBC) plugin

Figure 1: Sliding window approach for Segmentation-by-Classification (SBC) plugin

The first step this plugin takes is to mask the audio by performing speech activity detection. This allows some natural segmentation by discovering breaks between speech sections caused by silence, and allows the algorithm to focus on the portions of the audio that actually contain speech. Any speech segment longer than X seconds (configurable as min_speech, default 2 seconds) is then processed to determine the likelihood of containing a speaker of interest. Speech regions of up to X seconds (configurable as win_sec, default 4 seconds) are processed and scored whole, while contiguous segments longer than this are then processed using the sliding window algorithm shown above, whose parameters (window size/win_sec and step size/step_sec) are configurable if you find the defaults not to work well with your data type.

Global Options

The following options are available to this plugin, adjustable in the plugin's configuration file; plugin_config.py.

| Option Name | Description | Default | Expected Range |

|---|---|---|---|

| det_threshold | Detection threshold: Higher value results in less detections being output, but of higher reliability. | 0.0 | -10.0 to 10.0 |

| win_sec | Length in seconds of the sliding window used to chunk audio into segments that will be scored by speaker recognition. See below for notes on how this will impact the system's performance. | 4.0 | 2.0 to 8.0 |

| step_sec | Amount of time in seconds the sliding window will shift each time it steps. See below for important notes about the sliding window algorithm behavior. A generally good rule of thumb to follow for setting this parameter is half of the window size. | 2.0 | 1.0 to 4.0 |

| min_speech | The minimum length that a speech segment must contain in order to be scored/analyzed for the presence of enrolled speakers. | 2.0 | 1.0 - 4.0 |

| output_only_highest_scoring_detected_speaker | Determines the output style of the plugin, and whether all speakers scoring over the detection threshold are reported for each applicable speech segment (value=False), or only the top scoring speaker (value=True). |

False | True or False |

Additional option notes

min_speech

The min_speech parameter determines the minimum amount of contiguous speech in a segment required before OLIVE will analyze it to attempt to detect enrolled speakers. This is limited to contiguous speech since we do not want the system to score audio that may be separated by a substantial amount of non-speech, due to the likelihood of including speech from two distinct talkers. The parameter is a float value in seconds, and is by default set to 2.0 seconds. Any speech segment whose length is shorter than this value will be ignored by the speaker-scoring portion of the plugin.

win_sec and step_sec

The win_sec and step_sec variables determine the length of the window and the step size of the windowing algorithm respectively. Both parameters are represented in seconds. These parameters affect the accuracy, the precision in the boundaries between speakers, and the speed of the approach. Figure 2 shows an example on how the modification of size of the window (W) and the step (S) affect those factors.

Figure 2: Example of changing the win_sec and step_sec parameters and how this influences the algorithm speed as well as the precision and accuracy of the resulting speaker boundary labels

Figure 2: Example of changing the win_sec and step_sec parameters and how this influences the algorithm speed as well as the precision and accuracy of the resulting speaker boundary labels

output_only_highest_scoring_detected_speaker

The boolean output_only_highest_scoring_detected_speaker parameter determines the format of the output by the plugin. If output_only_highest_scoring_detected_speaker is set to False, the plugin will report all the speakers above the threshold for a given segment. However, if output_only_highest_scoring_detected_speaker is set as True, the plugin will report only the speaker with the maximum score for a given segment even when multiple speakers have scores above the threshold. An example of this behavior distance follows.

If we have a segment (S) with scores for three different speakers previously enrolled,

S spk1 10.8

S spk2 8.2

S spk3 3.1

and the threshold is 5.0, then with output_only_highest_scoring_detected_speaker = True, the system reports:

S spk1 10.8

However, with output_only_highest_scoring_detected_speaker = False, the system reports:

S spk1 10.8

S spk2 8.2

The default behavior of this plugin is to have this parameter set to False and to report all speaker detections over the detection threshold for each region.